The Oracle, the Library, and the Test

How the hallucinations and memories of artificial intelligence reflect our own obsessions with certainty

On the slopes of Mount Parnassus, at the foot of the temple of Apollo, men flocked to the oracle in search of certainty. There, in a space saturated with incense and mystery, the voice of the Pythia, the priestess of Apollo, descended like a divine revelation. But her words were always double, like mirrors facing each other: they said and unsaid, promised and warned at the same time. King Croesus, before launching his attack on the Persians, received the famous reply:

‘If you cross the river Halys, you will destroy a great empire.’

He thought he heard the promise of victory, when in fact the empire destined to fall was his own. The oracle spoke with the certainty of one who knows the future, yet left the interpretation—and the error—to the human ear.

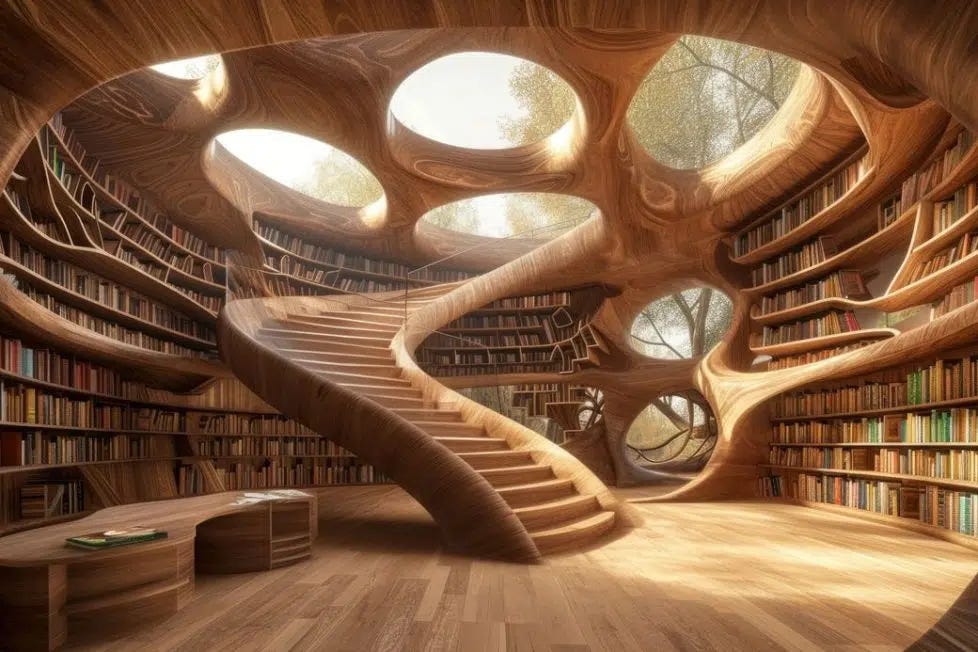

Many centuries later, Borges imagined another form of this ambiguity in The Library of Babel: a delirious universe containing all possible books, all combinations of letters, all true and false sentences. There, a volume revealing the exact date of a battle was surrounded by infinite volumes narrating incorrect dates with identical aplomb. The reader was immersed in an ocean of plausibilities, unable to distinguish the spark of truth amid the unfathomable noise.

And, on a more domestic level, anyone who has ever taken a school exam knows the same game. Faced with an impossible question, the student writes an invented answer on the page, in confident handwriting, as if confidence could redeem a lack of knowledge. In the relentless logic of marks and grades, taking a risk is better than keeping quiet; silence is punished, conjecture rewarded.

The oracle, the library and the exam: three scenes that, without meaning to, anticipate the present. Our language models speak with the same ambiguous voice as Delphi, generate infinite libraries of phrases as in Babel, and respond like schoolchildren eager to please, always risking a guess rather than admitting ignorance. We have called this phenomenon ‘hallucination’, as if it were a human delusion. But the word is misleading: there are no ghosts or visions here, only a cold calculation that privileges the appearance of certainty over the honesty of emptiness.

Machines do not hallucinate because they are capricious, but because we have trained them to do so. In their training, as in exams, audacity is rewarded and prudence is punished. A system that responded ‘I don't know’ would be dismissed as mediocre, even when that was the most sensible answer available. That is why, when a model does not have enough information, it does not shrug its shoulders: it invents. And it does so with the solemnity of the Pythia, with the conviction of a text printed in the Library of Babel, with the firmness of a student who risks the date of a war.

The result is disturbing. When asked about the biography of a stranger, the model offers specific dates and precise locations, as if it had been there. It has not. What we see is the statistical effect of learning that turns doubt into feigned certainty. And most revealingly, this deception is not an accidental error, but the optimal strategy within the game we have proposed.
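
The arithmetic behind that claim can be sketched in a few lines. What follows is only an illustration under assumptions of my own: a correct answer earns one mark, an abstention earns nothing, and a wrong answer costs a penalty that ordinary grading sets to zero. The names `expected_scores` and `best_action` are hypothetical and describe no real evaluation suite.

```python
def expected_scores(p_correct, wrong_penalty=0.0):
    """Return the expected mark for guessing and for abstaining.

    Assumed scoring (illustrative only): +1 for a correct answer,
    -wrong_penalty for a wrong one, 0 for saying "I don't know".
    """
    guess = p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty
    abstain = 0.0
    return guess, abstain


def best_action(p_correct, wrong_penalty=0.0):
    """Pick whichever action has the higher expected mark."""
    guess, abstain = expected_scores(p_correct, wrong_penalty)
    return "guess" if guess > abstain else "abstain"


if __name__ == "__main__":
    # A student who is right only one time in ten.
    print(best_action(0.1))                     # wrong answers cost nothing
    print(best_action(0.1, wrong_penalty=1.0))  # wrong answers cost a mark
```

With no penalty, any chance of being right, however small, makes guessing the better bet. Only when a wrong answer costs something does silence become rational, and in this toy scoring the break-even point is a confidence of `wrong_penalty / (1 + wrong_penalty)`.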

At the other extreme lies memory. It is often imagined that these intelligences store the entire ocean of the internet within them, like limitless sponges. The reality is more modest and more surprising: their memory is finite, measurable, almost mathematical. Each parameter of these colossal models can store only a few bits, as if each were a tiny file cell. Billions of cells, yes, but each with a precise capacity. And what happens during learning is that these cells fill up, first with the voracity of a student memorising lists, and then, when saturated, with something akin to understanding: the model begins to grasp general patterns and stops retaining isolated examples.
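
To give a sense of scale, here is a back-of-the-envelope sketch. The figures are assumptions chosen for illustration, not measurements: the essay says only ‘a few bits’ per parameter, so the value of 4 bits and the model size of one billion parameters are hypothetical, as is the function name `capacity_bytes`.

```python
def capacity_bytes(n_params, bits_per_param):
    """Total storage implied by a fixed number of bits per parameter."""
    return n_params * bits_per_param / 8


if __name__ == "__main__":
    # Hypothetical model: one billion parameters, 4 bits each (assumed).
    n_params = 1_000_000_000
    bits_per_param = 4
    gigabytes = capacity_bytes(n_params, bits_per_param) / 1e9
    print(f"{gigabytes} GB of raw memorisation capacity")
```

Under those assumptions the whole model could hold about half a gigabyte verbatim, a shelf rather than an ocean, which is why it must eventually trade isolated examples for patterns.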

It is in that transition—from memory to generalisation, from repetition to pattern—that a strange mirage appears. Because a model can recite a rare piece of data if it stored it intact, or it can invent a new one that fits the form of what it saw before. In both cases, it speaks with equal conviction. And the listener, like King Croesus, must decide whether to trust that voice.

What these experiments ultimately give us is a portrait of ourselves. Don't we do the same thing? We memorise eagerly, improvise when our memory fails us, generalise when we can't remember. We prefer certainty, even when it is false, to the emptiness of ‘I don't know’. We punish doubt in exams, in debates, in politics. It is not surprising that we have built machines in our own image: oracles that always respond, even when they don't know.

The question that arises is uncomfortable: do we want intelligences that dazzle us with certainties, or intelligences that have the courage to remain silent? The brilliance of the oracle, the infinity of the library and the audacity of the student seduce us. But perhaps true progress lies elsewhere: in designing systems that value modesty, that recognise their gaps, that learn to say ‘I don't know’.

Borges wrote that the Library of Babel was a monstrous reflection of the universe. Perhaps these models are too, not because they contain all possible truths and falsehoods, but because they reflect our own inability to inhabit uncertainty. Like the king who marched confidently towards his ruin, like the student who answers with aplomb a question he does not understand, like the reader who gets lost in endless corridors of plausible books, we too can be spellbound by the voice of the machine. And perhaps the most profound lesson is not to perfect it, but to learn to listen to it with suspicion, to interpret its ambiguity, to read in it what it says and what it does not say.

Because artificial intelligence, in the end, is not an infallible oracle, nor a complete library, nor a perfect student. It is merely a mirror, multiplied and distorted, in which we are forced to contemplate the fragility of our own ways of knowing.

We’ll see.