The dawn of humanoid robots: AI's next frontier

How foundation models and imitation learning are revolutionizing robotics in 2024

For decades, roboticists have more or less focused on controlling robots’ “bodies”—their arms, legs, levers, wheels, and the like—via purpose-driven software. But a new generation of scientists and inventors believes that the previously missing ingredient of AI can give robots the ability to learn new skills and adapt to new environments faster than ever before. This new approach, just maybe, can finally bring robots out of the factory and into our homes.

If you follow robotics at all you may have noticed that there has been an explosion of humanoid robot startups. What's with that? Why humanoids? Why now?

One observer builds a timeline from his own conclusions:

2018: Boston Dynamics releases their first parkour video. Bipedal motion works well enough to count on.

2019: Convolutional deep neural networks make perception (object detection/localization) work well enough to do manipulation.

2022: ChatGPT convinces everyone that natural language is going to work well enough to power actual products.

2023: Tesla releases videos of their humanoid robots. VCs notice and funding for humanoids is suddenly much more attainable.

2024: Behavior cloning for manipulation starts to work well enough for tech demos and videos.

2023 has witnessed a tsunami of progress in the field of robotics, largely driven by the proliferation of Foundation Models. This advancement has been not only technological but also philosophical in terms of research.

From a technological standpoint, we've seen breakthroughs in robotics incorporate specific models like GPT-3, PaLI, and PaLM. Learning algorithms and architectural components such as self-attention and diffusion have also been adopted, along with datasets and infrastructure from areas such as visual question answering (VQA) and computer vision (CV).

But perhaps the most exciting aspect has been the mindset shift in robotics research. This year marks a milestone in embracing the foundation modeling philosophy: a fervent belief in the power of scalability, diverse data sources, the importance of generalization, and emergent capabilities. For the first time, we're not just theorizing about applying these principles to robotics – we're actually putting them into practice.

How did we reach this exciting point?

It all began in 2022 when humanity discovered (or created) the closest thing to a magical alien artifact: Large Language Models (LLMs). These "artifacts" worked surprisingly well across various language reasoning domains, but we weren't quite sure how to apply them to robotics.

By mid-2022, it seemed we could keep the LLM in a controlled "box," assisting with planning and semantic reasoning, but its outputs were still high-level abstractions. At the end of this first act, it was decided that low-level control – the hard part of robotics – still needed to be developed in-house, perhaps inspired by the LLM but kept separate from its arcane workings.
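The split described above, with a language model producing high-level steps and separately written code handling low-level control, can be sketched roughly as follows. Everything here is hypothetical and for illustration only: `stub_planner` stands in for an LLM call with a canned lookup table, and the skill names and the `name:argument` step format are assumptions, not any real system's interface.

```python
from typing import Callable, Dict, List


def stub_planner(instruction: str) -> List[str]:
    """Hypothetical stand-in for an LLM: maps an instruction to abstract steps."""
    canned_plans = {
        "bring me the cup": [
            "navigate_to:table",
            "grasp:cup",
            "navigate_to:user",
            "release:cup",
        ],
    }
    return canned_plans.get(instruction.lower().strip(), [])


# Low-level skills are developed separately from the planner.
# Real skills would drive motors; these only describe what they would do.
def navigate_to(target: str) -> str:
    return f"moved base to {target}"


def grasp(target: str) -> str:
    return f"closed gripper on {target}"


def release(target: str) -> str:
    return f"opened gripper, released {target}"


SKILLS: Dict[str, Callable[[str], str]] = {
    "navigate_to": navigate_to,
    "grasp": grasp,
    "release": release,
}


def execute(instruction: str) -> List[str]:
    """Ask the planner for steps, then dispatch each one to a low-level skill."""
    log = []
    for step in stub_planner(instruction):
        name, _, arg = step.partition(":")
        skill = SKILLS.get(name)
        if skill is None:
            # The planner asked for something the robot cannot do.
            log.append(f"unknown skill: {name}")
            break
        log.append(skill(arg))
    return log


log = execute("Bring me the cup")
for line in log:
    print(line)
```

In this sketch the planner never touches motor commands: it can only pick from the skills the robot already has, which is why the hard low-level work stays outside the "box".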

This first contact between robotics and LLMs was intriguing and exciting, but not yet transformative. However, it laid the groundwork for the revolutionary advancements we've seen in 2023.

The following text is taken from NVIDIA's official announcement (March 2024):

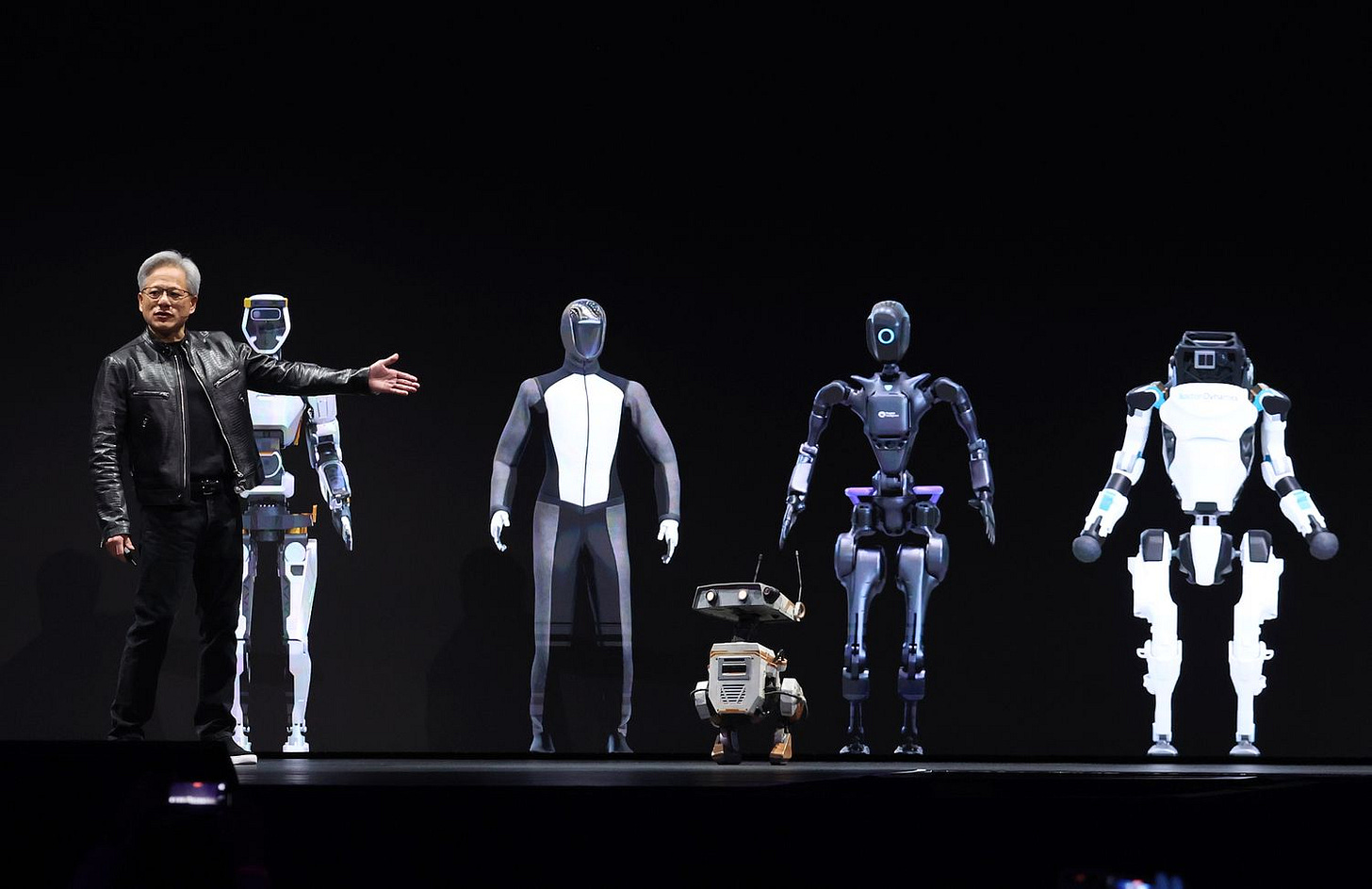

NVIDIA today announced Project GR00T, a general-purpose foundation model for humanoid robots, designed to further its work driving breakthroughs in robotics and embodied AI.

As part of the initiative, the company also unveiled a new computer, Jetson Thor, for humanoid robots based on the NVIDIA Thor system-on-a-chip (SoC), as well as significant upgrades to the NVIDIA Isaac™ robotics platform, including generative AI foundation models and tools for simulation and AI workflow infrastructure.

In other words, NVIDIA is not going to build robots itself. Instead, it is developing general-purpose foundation models for (humanoid) robots, along with the chips and software needed to train robots more quickly and on many tasks.

One of the biggest problems with robot training is that reinforcement learning (RL) requires thousands and thousands of trials, which is extremely costly in time.

Robots powered by GR00T, which stands for Generalist Robot 00 Technology, will be designed to understand natural language and emulate movements by observing human actions — quickly learning coordination, dexterity and other skills in order to navigate, adapt and interact with the real world.

Simply put, NVIDIA is aiming to develop robot learning through what researchers call 'imitation learning'. The technique is not new; it is the main alternative to RL for training robots to learn new tasks. In imitation learning, models learn to perform tasks by imitating demonstrations, for example the actions of a human who teleoperates a robot or uses a VR headset to collect data on a robot. It’s a technique that has gone in and out of fashion over decades but has recently become more popular with robots that do manipulation tasks.
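To make the idea concrete, here is a minimal sketch of behavior cloning, the simplest form of imitation learning, on a toy one-dimensional problem. It is not NVIDIA's method. The "expert" is a hypothetical stand-in for a human teleoperator, the dynamics (`state += action`) are invented for illustration, and the policy is a single learned gain fitted by least squares.

```python
import random


def expert_action(state: float) -> float:
    """Stand-in for a human teleoperator: push the joint back toward 0."""
    return -0.5 * state


def collect_demonstrations(n: int, seed: int = 0) -> list:
    """Record (state, action) pairs while the 'expert' controls the robot."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        state = rng.uniform(-1.0, 1.0)
        # Small noise models imperfect human demonstrations.
        action = expert_action(state) + rng.gauss(0.0, 0.01)
        demos.append((state, action))
    return demos


def fit_linear_policy(demos: list) -> float:
    """Least-squares fit of action = w * state (supervised, no rewards)."""
    num = sum(s * a for s, a in demos)
    den = sum(s * s for s, _ in demos)
    return num / den


def rollout(w: float, state: float, steps: int) -> float:
    """Run the learned policy in a toy system where state += action."""
    for _ in range(steps):
        state += w * state
    return state


demos = collect_demonstrations(200)
w = fit_linear_policy(demos)
final_state = rollout(w, state=1.0, steps=20)
print(f"learned gain: {w:.3f}, state after 20 steps: {final_state:.6f}")
```

The point of the sketch is that the policy is fitted once from 200 recorded demonstrations, with no trial-and-error on the robot. Real systems replace the single gain with a neural network and the scalar state with camera images and joint readings.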

So NVIDIA is in fact trying to build chips that run at low computational cost and make it easier for robots from other manufacturers to learn general-purpose tasks. I think reduced energy consumption will be key to any eventual mass adoption of robots in industry and society.

One author summarizes the main trends for training robots with LLMs on his great blog. In fact, Google presented SayTap in July 2023 for communicating with a robot dog through command prompts. A great deal of progress has been made since then.

Considering the human-robot interaction this requires, Meta’s bet on its Ray-Ban smart glasses now makes sense to me, as do Apple’s moves toward controlling robots with smartphones, which I think is the ultimate objective.

After GPT emerged, embodied AI became possible.

Humanoid robots may no longer need remote controls.

Over time, we can give commands to robots directly through voice.

The cost of training robots is lower than training humans.

This means that tens of millions or even hundreds of millions of blue-collar workers will simultaneously lose their comparative advantage.

It also represents a market worth tens of trillions or even hundreds of trillions.

With this prospect, venture capitalists will flock in.

So instead of focusing on the breakthrough in humanoid robots, we should focus on the end-to-end breakthrough in embodied AI.

In fact, looking at GPT-4 today, the intelligence available already exceeds what is needed. Most embodied AI does not require college-level ability.

What we lack is alignment technology and data. These all require money and time.

I feel that the GPT moment for embodied AI should be coming soon.

Optimistically speaking, a company like OpenAI for embodied AI has already emerged, but we just don't know which one it is. Perhaps by this time next year, we will welcome the GPT moment, and then begin exponential growth.

This approach reminds me of Brooks' famous paper "Elephants Don't Play Chess" (https://www.sciencedirect.com/science/article/abs/pii/S0921889005800259), which helped shift AI away from GOFAI and toward embodied, behavior-based approaches grounded in the physical world. It is probably a good path for robots to be exposed to all available sources of learning, not only interacting with the real world in laboratories but also "watching" real videos of humans moving through reality.