AI and the Hidden Patterns of Knowledge: Automating Scientific Discovery

How Artificial Intelligence Can Uncover Novel Connections and Accelerate Innovation Through Combinatorial Thinking

"Imagine that the pieces of a puzzle are independently designed and created, and that, when retrieved and assembled, they then reveal a pattern, undesigned, unintended, and never before seen, yet a pattern that commands interest and invites interpretation. So it is, I claim, that independently created pieces of knowledge can harbor an unseen, unknown, and unintended pattern." (Don R. Swanson)

In the rapidly evolving landscape of artificial intelligence and robotics, one of the most intriguing applications is the potential to automate scientific discovery. The question isn't merely whether machines can generate hypotheses, but whether they can uncover valuable connections that human researchers miss due to the sheer volume of scientific literature. This exploration sits at the fascinating intersection of AI, information retrieval, and scientific innovation.

The Combinatorial Nature of Innovation

One compelling theory about innovation holds that the most valuable discoveries emerge from novel combinations of existing ideas. This is not just philosophical speculation: researchers have studied it empirically, for example by measuring how research papers combine older ideas and which combinations lead to the most impactful work.

This concept, known as "combinatorial innovation," suggests that new ideas are essentially novel configurations of pre-existing components. As author Matt Clancy explains, even Thomas Edison's famous lightbulb can be understood this way—Edison tested thousands of different materials combined with his bulb apparatus before finding a filament that worked. Once invented, the lightbulb itself became a component that could be combined with other technologies to create desk lamps, headlights, and more.

The scientific method as a cycle: Observation leads to research, hypothesis, testing, analysis, and reporting conclusions, which in turn feed new observations. Each stage represents an opportunity for AI to assist discovery.

Consider a simplified model of science as a completely closed system, in which scientists are confined to windowless labs and derive everything they know about the outside world from a library of scientific papers. In this model, any new observation a scientist makes would necessarily depend on the reported conclusions of other scientists.

But this is not close to reality. Scientists do, in fact, spend time outside of their windowless labs. They can make observations about the world which do not come from something they’ve read. They have friends and colleagues with whom they sometimes discuss these observations, and these discussions are usually not written down or published.

Despite these shortcomings, the simplicity of this agent-based model of science has appealing computational properties. Some combinatorial theories of innovation suggest that novel discoveries result from unique combinations of pre-existing concepts, so we may not even need to assume these scientists are competent; we just need enough of them to efficiently explore the combinatorial space of plausible hypotheses. Given the recent growth of natural language processing capabilities, this simplified model’s sole reliance on scientific text as the basis for new scientific observations prompts a question: could we automate this model of scientists and extract novel scientific observations from the scientific literature alone?
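The closed, library-only model of science can be simulated in a few lines. The sketch below is a toy under loudly stated assumptions: each "scientist" is a random sampler with no expertise, each untested pair of concepts has a fixed, hypothetical probability `p_success` of yielding a new concept, and every success goes back into the library as a new building block. Concepts are modeled as frozensets of base components, so a combination of combinations is simply a larger set. The function name and all parameters are illustrative inventions, not part of any published model.

```python
import itertools
import random


def run_library_model(base_concepts, n_scientists=50, n_rounds=5,
                      p_success=0.05, seed=0):
    """Toy closed-world model: scientists can only recombine what is in the library.

    Hypothetical assumptions, for illustration only:
    - base_concepts are strings; a concept is a frozenset of base components;
    - each scientist tests one random, untested pair of concepts per round
      (if there are fewer untested pairs than scientists, some stay idle);
    - each tested pair succeeds independently with probability p_success.
    """
    rng = random.Random(seed)
    library = {frozenset([c]) for c in base_concepts}
    tested = set()  # unordered pairs of concepts already examined

    for _ in range(n_rounds):
        # Sort for reproducibility: set iteration order can vary between runs.
        concepts = sorted(library, key=lambda c: sorted(c))
        untested = [
            pair for pair in itertools.combinations(concepts, 2)
            if frozenset(pair) not in tested
        ]
        rng.shuffle(untested)
        for a, b in untested[:n_scientists]:
            tested.add(frozenset((a, b)))
            if rng.random() < p_success:
                # A successful combination becomes a new component in the library.
                library.add(a | b)
    return library


library = run_library_model(["fish oil", "blood viscosity", "Raynaud's syndrome",
                             "magnesium", "migraine"], p_success=0.2)
print(f"{len(library)} concepts in the library")
```

With `p_success=0` the library never grows, while more scientists or more rounds explore more of the pair space. That is the sense in which, in this model, sheer numbers can stand in for competence.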

Combinatorial innovation

In his 1998 work, the late economist Martin Weitzman outlined some striking implications of combinatorial models of innovation. Weitzman described innovation as combining two existing ideas or technologies which, with adequate R&D investment and some luck, yield a new idea or technology. He illustrated this with Thomas Edison's search for a suitable lightbulb filament: Edison tested thousands of different materials in combination with his lightbulb design before finding one that worked. The lightbulb is not unusual in this respect; virtually any innovation can be viewed as a novel arrangement of previously existing components.

An important point is that once you successfully combine two components, the resulting new idea becomes a component you can combine with others. To stretch Weitzman’s lightbulb example, once the lightbulb had been invented, new inventions that use lightbulbs as a technological component could be invented: things like desk lamps, spotlights, headlights, and so on.

One of the first people to investigate the potential for automating scientific discovery was Don R. Swanson. An early computational linguist and information scientist, Swanson laid the foundations of what would become the field of literature-based discovery in a 1986 paper titled “Undiscovered Public Knowledge” (Swanson, 1986). In the paper, Swanson argues that the distributed and depth-first organisation of the scientific enterprise generates significant latent knowledge which, if properly retrieved and combined, could lead to new scientific discoveries.

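Swanson developed the "ABC procedure": if one body of literature reports a relationship between concepts A and B, and a separate body of literature that does not cite the first reports a relationship between B and C, then A and C may be connected in a way nobody has yet published. His successful applications included linking fish oil to the treatment of Raynaud's syndrome, magnesium to migraine relief, and endurance athletics to atrial fibrillation risk, each of which later found support in clinical or epidemiological studies.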
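Swanson's ABC procedure can be sketched as a search over a graph of co-occurring terms. This is a deliberately simplified sketch: the links below are hand-made toy data loosely modeled on the fish oil example (not extracted from any corpus, and "condition X" is a hypothetical placeholder), and candidate C terms are ranked only by how many intermediate B terms connect them to A. Real literature-based discovery systems also check that the A-B and B-C literatures are disjoint and weight links by term statistics, which this sketch omits. The function name `abc_candidates` is illustrative.

```python
from collections import defaultdict


def abc_candidates(links, a):
    """Rank C terms linked to A only indirectly, through shared B terms.

    links: iterable of (term, term) pairs, treated as undirected
    co-occurrence relationships between concepts.
    Returns a list of (c, sorted list of bridging B terms), ordered by the
    number of bridging terms (descending), then alphabetically.
    """
    graph = defaultdict(set)
    for x, y in links:
        graph[x].add(y)
        graph[y].add(x)

    bridges = defaultdict(set)
    for b in graph[a]:
        for c in graph[b]:
            # Skip A itself and anything already directly linked to A.
            if c != a and c not in graph[a]:
                bridges[c].add(b)

    return sorted(
        ((c, sorted(bs)) for c, bs in bridges.items()),
        key=lambda item: (-len(item[1]), item[0]),
    )


# Toy links for illustration only; not data from Swanson's studies.
toy_links = [
    ("fish oil", "blood viscosity"),
    ("fish oil", "platelet aggregation"),
    ("blood viscosity", "Raynaud's syndrome"),
    ("platelet aggregation", "Raynaud's syndrome"),
    ("platelet aggregation", "condition X"),
]

for c, bs in abc_candidates(toy_links, "fish oil"):
    print(c, "via", bs)
```

Raynaud's syndrome ranks first because two independent B terms bridge it to fish oil, which mirrors the intuition that several converging indirect links make a hidden A-C connection more plausible.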

What makes Swanson's work particularly striking is the personal context behind his discoveries. Swanson himself reportedly suffered from Raynaud's syndrome and frequent migraine headaches. Although he was an avid runner who completed a half-marathon at age 80, he also had chronic atrial fibrillation, which eventually led to strokes in 2007 that ended both his running and his scientific career. His own health problems likely guided his research interests, a reminder that even "objective" scientific discovery is often driven by personal experience.

Modern AI techniques take Swanson's largely manual methods to a far larger scale, making it possible to search millions of documents for hidden connections.

Word Embedding and Vector Representations

Tshitoyan et al. (2019) showed that unsupervised learning algorithms can capture meaningful relationships between scientific concepts. Training word embeddings on 3.3 million materials science abstracts published between 1922 and 2018, they obtained vector representations that, in retrospective tests, identified promising thermoelectric materials years before those materials were reported as thermoelectrics.

Remarkably, although no chemical or physical knowledge was encoded in advance, the Word2Vec model learned embeddings whose vector arithmetic mirrored real physical relationships, much as general-purpose word embeddings capture analogies such as king − man + woman ≈ queen.
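The two operations behind these results, ranking terms by cosine similarity and solving analogies by vector arithmetic, can be sketched in plain Python. The 3-dimensional vectors below are hand-made toys chosen so the arithmetic is easy to follow; real Word2Vec embeddings are learned from text and have far more dimensions. The names `material_A`, `material_B`, and `material_C` are hypothetical placeholders, not results from Tshitoyan et al.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


def analogy(vectors, a, b, c):
    """'a is to b as c is to ?': the word closest to vec(b) - vec(a) + vec(c),
    excluding the three query words themselves."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(vectors[w], target))


def rank_by_similarity(vectors, query, candidates):
    """Order candidate terms by cosine similarity to the query, most similar first."""
    return sorted(candidates, key=lambda w: cosine(vectors[w], vectors[query]), reverse=True)


# Toy vectors, hand-made for illustration (dimensions loosely: royalty, maleness, other).
words = {
    "king": [0.9, 0.9, 0.1],
    "queen": [0.9, 0.1, 0.1],
    "man": [0.1, 0.9, 0.2],
    "woman": [0.1, 0.1, 0.2],
    "apple": [0.0, 0.5, 0.9],
}

# Toy "materials" space: which hypothetical material sits closest to "thermoelectric"?
materials = {
    "thermoelectric": [0.2, 0.3, 0.9],
    "material_A": [0.25, 0.3, 0.8],
    "material_B": [0.9, 0.1, 0.1],
    "material_C": [0.5, 0.5, 0.5],
}

print(analogy(words, "man", "king", "woman"))
print(rank_by_similarity(materials, "thermoelectric",
                         ["material_A", "material_B", "material_C"]))
```

Ranking candidates by their similarity to a property word such as "thermoelectric" is in the spirit of the materials study; with these toy vectors it simply orders the placeholders A, C, B.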

The Strange Dynamics of Combinatorial Innovation

One of the most fascinating aspects of combinatorial innovation is its peculiar growth pattern. Martin Weitzman demonstrated in 1998 that combinatorial processes start slowly but eventually explode in productivity.

To illustrate, consider a simple example. Start with 100 ideas. They can form 100 × 99 / 2 = 4,950 unique pairs. If just 1% of these combinations yield viable new ideas, we get roughly 49 new ideas, for a total of 149. These 149 ideas can form 11,026 possible pairs. After removing the 4,950 pairs we have already investigated, 6,076 new combinations remain to explore. If 1% of these are viable, we add roughly 61 more ideas. The process continues, with each round producing more new ideas than the last.
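The arithmetic in this example follows from the pair-count formula C(n, 2) = n(n − 1) / 2 and can be checked with a short simulation. This is a simplified sketch of the worked example, not Weitzman's full model: each period, every not-yet-examined pair is tried once, and 1% of them (rounded down, an assumption of this sketch) become new ideas. Because it rounds down, the second period yields 60 new ideas rather than the roughly 61 quoted in the text. The function name `combinatorial_growth` is illustrative.

```python
from math import comb


def combinatorial_growth(initial_ideas=100, success_pct=1, periods=6):
    """Toy recombinant-growth model.

    Each period, every pair of ideas not examined in an earlier period is
    tried once, and success_pct percent of those pairs (rounded down)
    become new ideas that join the pool.
    Returns a list of (period, new_pairs, new_ideas, total_ideas).
    """
    ideas = initial_ideas
    examined = 0  # total pairs examined so far
    history = []
    for period in range(1, periods + 1):
        all_pairs = comb(ideas, 2)         # n choose 2 = n(n - 1) / 2
        new_pairs = all_pairs - examined   # pairs that involve at least one new idea
        new_ideas = new_pairs * success_pct // 100
        examined = all_pairs
        ideas += new_ideas
        history.append((period, new_pairs, new_ideas, ideas))
    return history


for period, new_pairs, new_ideas, total in combinatorial_growth():
    print(f"period {period}: {new_pairs:>9,} new pairs -> "
          f"{new_ideas:>6,} new ideas, {total:>6,} total")
```

The first two periods reproduce the 4,950 and 6,076 pair counts above. By the sixth period, the new ideas produced in a single round outnumber every idea accumulated before it, which is the explosion described in the figure caption.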

The growth of ideas via combinatorial innovation: at first, growth resembles an exponential process, but by period 6 an explosion occurs, and the new ideas in each period dwarf all previously accumulated knowledge.

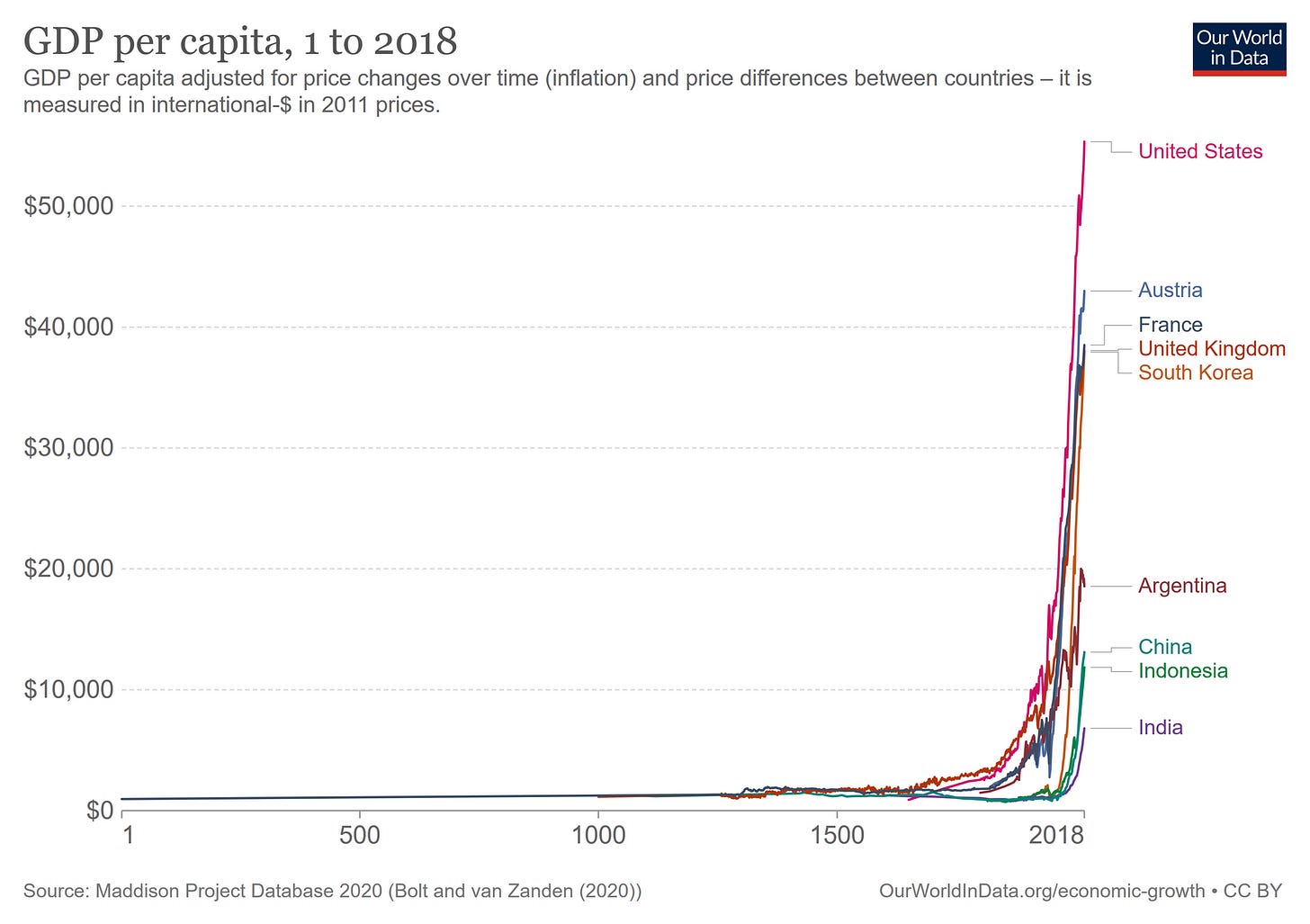

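What's remarkable is how closely this pattern resembles the long-run history of human innovation and economic growth: a very long period of slow progress, followed by rapid acceleration over the last few centuries.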

Matt Clancy has also collected several ways of measuring how research papers combine older ideas, and which kinds of combinations tend to produce the most impactful new ideas. I find this work particularly interesting.

The Poincaré Process: How Great Minds Search Combinatorial Space

Henri Poincaré, one of history's greatest mathematicians, provided a remarkable glimpse into how the human mind navigates combinatorial spaces in his essay on mathematical creation:

"One evening, contrary to my custom, I drank black coffee and could not sleep. Ideas rose in crowds; I felt them collide until pairs interlocked, so to speak, making a stable combination. By the next morning I had established the existence of a class of Fuchsian functions, those which come from the hypergeometric series; I had only to write out the results, which took but a few hours."

Poincaré recognized that successful innovation requires navigating the vast combinatorial landscape efficiently:

"To invent, I have said, is to choose; but the word is perhaps not wholly exact. It makes one think of a purchaser before whom are displayed a large number of samples, and who examines them, one after the other, to make a choice. Here the samples would be so numerous that a whole lifetime would not suffice to examine them. This is not the actual state of things. The sterile combinations do not even present themselves to the mind of the inventor. Never in the field of his consciousness do combinations appear that are not really useful, except some that he rejects but which have to some extent the characteristics of useful combinations."

This is close to what we hope AI systems might accomplish: navigating the combinatorial space efficiently, so that most sterile combinations are never examined and promising ones that humans might miss are brought to the surface.

Conclusion: The Undiscovered Country of Scientific Knowledge

We have already experienced many forms of automation of the innovation pipeline:

word processing automated certain typesetting tasks associated with writing up our results

statistical packages automate statistical analyses that used to be performed by hand or by writing custom code

Google has “automated” walking the library stacks and flipping through old journals

Elicit automates many parts of the literature review process

AlphaFold automates the prediction of proteins' 3D structures from their amino acid sequences

Automated theorem proving may do just what the name implies.

The exponential growth of the scientific literature means that no individual researcher can master all relevant knowledge, even within a narrow specialization. The life sciences alone account for over 37 million papers in the OpenAlex bibliographic database, more than any other subject. Within this vast body of knowledge, many valuable connections likely remain hidden.

The ultimate question isn't whether AI can accelerate science, but whether its cognitive resources can scale fast enough to keep pace with the explosive growth of combinatorial possibilities.

AI tools that help us navigate and connect knowledge across domains won't replace human scientists but will become their essential companions in exploration. The future belongs not to those who blindly trust AI nor to those who reject it entirely, but to those who learn to dance with these new tools—leveraging their strengths while compensating for their weaknesses through human creativity, critical thinking, and experimental validation.

Large language model (LLM) systems such as Deep Research represent a powerful new tool in this combinatorial discovery process. By efficiently processing and connecting concepts across millions of scientific papers, LLMs can identify patterns and relationships that might otherwise remain hidden in our fragmented scientific landscape. Just as Swanson manually connected disparate literatures to uncover new medical hypotheses, modern LLMs can make similar connections at far greater scale and speed. However, the most productive approach will likely mirror Poincaré's insight: these tools should not simply generate random combinations, but should help researchers navigate the vast combinatorial space more efficiently, highlighting promising connections worthy of further investigation. In this emerging paradigm, human scientists who develop symbiotic relationships with these AI systems, guiding their exploration while leveraging their pattern-recognition capabilities, will unlock new frontiers of scientific discovery that neither humans nor machines could reach alone.

Bibliography

How much new knowledge is hidden in scientific text?

Combinatorial innovation and technological progress in the very long run

The Haber-Bosch process for ammonia synthesis is another good example: hundreds of catalysts and combinations of temperature and pressure were tested before the most suitable ones were found.